Darwin Bicentenary Part 19: Dembski's Active Information Continued...

Characters of the Wild Web Number 11:

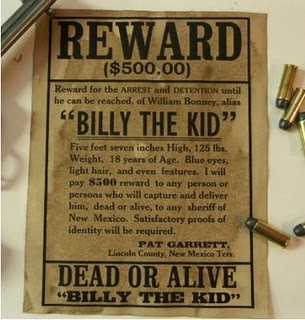

"Satisfactory Mathematical Proofs required", but Billy the DembskID is

still not wanted by the evolutionists.

Continuing my look at Dembski’s “active information”….

Dembski’s active information works as follows. We imagine a system in which a selected outcome, or a selected range of outcomes, has a probability p of appearing. In the sort of system Dembski has in mind p is usually tiny, both because the class of selected outcomes is very, very small compared with the total number of possible outcomes and because the system is assumed to have an even distribution of probability amongst the vast number of possible cases.
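As a worked illustration (the sequence length and alphabet size are assumptions chosen for round numbers, not figures taken from Dembski), let the possible outcomes Ω be all sequences of length L over an alphabet of A symbols, let the selected class T contain a single sequence, and let probability be spread evenly:

```latex
p = \frac{|T|}{|\Omega|} = \frac{1}{A^{L}}, \qquad -\log_2 p = L \log_2 A .
% Example: L = 100, A = 4 gives p = 4^{-100} = 2^{-200}, i.e. -\log_2 p = 200 bits.
```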

We now imagine applying some kind of physical constraint to the system that redistributes the probabilities amongst the possible outcomes so that they are no longer even. I call this a “constraint” because the system no longer has its full range of possibilities open to it; probability is lost on certain outcomes in favour of others. Given this new distribution, the probability of the selected outcome(s) of interest becomes q, and we assume that q is much greater than p. The information values associated with the selected outcomes before and after the introduction of the constraint are –log(p) and –log(q) respectively. The system has effectively lost information: we now know more about its outcomes, and so their ability to provide us with information is lessened. The information has passed from the system to our heads. Thus the loss of information to the system is –log(p) – [–log(q)] = log(q/p). Dembski calls this value the “active information”; although it entails a loss of information to the system, it represents a corresponding gain in information to us as observers of that system.
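A minimal sketch of this bookkeeping, assuming a hypothetical toy system of 1024 equally likely outcomes with one selected outcome, and a hypothetical constraint that confines all probability to eight outcomes. Logs are taken base 2 so that information comes out in bits (the post leaves the base unspecified); the function names are illustrative only.

```python
import math

def normalize(weights):
    """Turn non-negative weights into a probability distribution."""
    total = sum(weights)
    return [w / total for w in weights]

def target_probability(dist, target):
    """Probability mass a distribution places on the selected outcomes."""
    return sum(dist[i] for i in target)

def active_information_bits(p, q):
    """Active information log2(q/p), i.e. (-log2 p) - (-log2 q)."""
    return math.log2(q / p)

# Hypothetical toy system: 1024 equally likely outcomes, one selected outcome.
N = 1024
target = {7}
uniform = normalize([1] * N)
p = target_probability(uniform, target)

# Hypothetical constraint: probability is confined to outcomes 0..7 and shared
# evenly among them; every other outcome loses its probability entirely.
constrained = normalize([1 if i < 8 else 0 for i in range(N)])
q = target_probability(constrained, target)

print(-math.log2(p), -math.log2(q), active_information_bits(p, q))
# prints: 10.0 3.0 7.0
```

The selected outcome drops from 10 bits of surprisal to 3, and the 7-bit difference is the active information the constraint supplies.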

In the case of Avida, the probability of the XNOR gate evolving is considerably elevated by the assignment of high persistence probabilities to the components and sub-components of XNOR. These persistence probabilities therefore introduce a constraint that lowers system information in favour of observer information, and in doing so introduce active information. As I have said in previous posts on Avida, these persistence probabilities have been put in by hand, and it is in this respect that the active information has been removed from the system in order to redistribute the probabilities over the possible cases in favour of the evolved outcome.
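The effect of persistence can be illustrated with a deliberately crude toy model. This is an assumption-laden sketch, not Avida's actual mechanism or parameters: suppose a target needs k components, each of which turns up independently with probability s on any trial. Without persistence, all k must appear on the same trial; with perfect persistence, a component, once found, is kept, so the components can be collected across T trials. The function names and numbers are hypothetical.

```python
import math

def prob_without_persistence(k, s, trials):
    """All k components must turn up together on one trial (chance s**k each time)."""
    per_trial = s ** k
    return 1 - (1 - per_trial) ** trials

def prob_with_persistence(k, s, trials):
    """Each component is kept once found, so each only needs to appear once in `trials`."""
    found_one = 1 - (1 - s) ** trials
    return found_one ** k

# Hypothetical parameters: 5 components, each appearing with probability 0.1 per trial, 50 trials.
k, s, trials = 5, 0.1, 50
p = prob_without_persistence(k, s, trials)
q = prob_with_persistence(k, s, trials)
print(f"p = {p:.2e}, q = {q:.3f}, active information = {math.log2(q / p):.1f} bits")
```

In this toy, persistence turns a roughly one-in-two-thousand chance into a near certainty, which is the sense in which a hand-chosen persistence rule acts as a constraint carrying active information.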

1. Fitness Functions

The constraint applied to a system like Avida is crucial; without it Avida would fail to produce an evolutionary result in realistic time. This constraint is often called a “fitness function”. The fitness function is itself an item chosen from amongst many possibilities. In his recent paper (which I hope to look at in more detail later) Dembski attempts to quantify this choice by showing that evolution of the Avida type is highly probable only because it depends in turn on a highly improbable choice of fitness function, taken from a huge class of spurious possibilities. I think Dembski’s analysis is one way of telling us that, whichever way we look at it, our universe, although it appears to us humans to have enormous space-time resources, is negligible in size when compared with the vast combinatorial space from which the relatively tiny class of complex cybernetic structures is taken. On this matter Dembski is almost certainly right.
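Why a good fitness function is itself improbable can be seen with a simple averaging argument. The model is an assumption: candidate constraints are treated as probability distributions drawn uniformly at random from all distributions over the outcomes (a flat Dirichlet), which is a toy in the spirit of Dembski's argument rather than a reproduction of his paper. Averaged over all such distributions, the mass on the target is just p, so by Markov's inequality the chance of blindly picking a constraint that gives the target at least q is at most p/q. Finding such a constraint therefore costs at least log2(q/p) bits, the same amount as the active information it delivers.

```python
import random

def random_distribution(n, rng):
    """Draw a distribution uniformly from the probability simplex (flat Dirichlet)
    by normalising independent exponential variates."""
    xs = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(xs)
    return [x / total for x in xs]

def fraction_of_good_constraints(n, target, q, samples, seed=0):
    """Estimate how often a randomly chosen constraint puts at least q on the target."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        dist = random_distribution(n, rng)
        if sum(dist[i] for i in target) >= q:
            hits += 1
    return hits / samples

# Hypothetical numbers: 20 outcomes and one target, so p = 0.05; ask for q = 0.2.
n, target, q = 20, {0}, 0.2
p = len(target) / n
estimate = fraction_of_good_constraints(n, target, q, samples=20000)
print(f"estimated {estimate:.4f}, Markov bound p/q = {p / q:.2f}")
```

For a single target the exact value in this model is (1 − q)^(n − 1) = 0.8^19 ≈ 0.0144, comfortably inside the bound of 0.25.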

2. Mathematical Constraints vs. Fitness Functions

I am not very keen on the term “fitness function”; it smacks too much of a commitment to evolution by selection. It is more general to refer to constraints: a constraint is a mathematical stipulation that redistributes probability unevenly over a set of possibilities, so that, as I suggested above, probability is lost on certain outcomes in favour of others. Ironically, in showing us Avida Dembski is showing us how, given the appropriate constraints, an evolution of sorts can happen. But his main point is that it can’t happen without a constraint, one that alters a level distribution of probabilities sufficiently to make the chance of evolution realistic. This point is, I feel, unassailable. But the big question for me is whether such constraints can be embodied in a succinct system of short algorithmic laws rather than applied by hand as in the case of Avida. In a real biological context this conjectured constraint would imply “reducible complexity”, the opposite of the ID community’s “irreducible complexity”. Reducible complexity is the condition needed to raise the probability of evolution to realistic levels. Evolutionists assume that this constraint is embodied in the basic laws of physics, which of course can be expressed in short algorithmic form. I suspect that ID theorists would much prefer the view that such constraints are inherently mathematically complex, cannot be expressed in short algorithmic form, and thus have to be put in directly by an intelligently guided hand. But then why shouldn’t the appropriate short algorithm, if one exists, also be sourced in intelligence?
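The contrast between a constraint set by hand and one embodied in a short rule can be illustrated with a toy. The rule below is a hypothetical example chosen for its simple closed form, not a model of physics or biology. Over the 2^n bit strings, a hand-set constraint would need a table of 2^n weights, whereas the one-line rule w(x) = 2^(−d(x, t)), with d the Hamming distance to a target t, fixes every weight at once. Summing the rule over all strings gives (1 + 1/2)^n, so the target's probability rises from p = 2^(−n) to q = 1.5^(−n), an active information of n·log2(4/3) bits. Note that this short rule still names the target t explicitly.

```python
import math

def hamming(a, b):
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")

def rule_weights(n, t):
    """Hypothetical short rule: weight each n-bit string by 2**-(distance to t)."""
    return [2.0 ** -hamming(x, t) for x in range(2 ** n)]

n, t = 10, 0b1011001110   # hypothetical 10-bit target string
weights = rule_weights(n, t)
q = weights[t] / sum(weights)   # the target's own weight is 2**0 = 1
p = 1 / 2 ** n                  # even distribution before the constraint
print(math.log2(q / p), n * math.log2(4 / 3))  # the two agree up to rounding
```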

3. Conservation of Information?

“You can’t create information!” is the morale-boosting rallying cry of the ID community. If nothing else, this slogan is very evocative. It is a hook for people who have some training in the physical sciences and who will remember the conservation laws of mass and energy. Didn’t these people suspect all along that something was missing from the physical sciences, something to do, perhaps, with that intuitively sensed property of organization? So how appropriate, then, that this intuitive “something” should be captured in a conservation law, completing a triune of conservation laws encompassing mass, energy and information. It’s all very compelling. If you say something like “Evolution breaks the law of conservation of information”, it certainly sounds as though you have just said something rigorous, scientific and unassailable. It raises the kudos of ID by suggesting that a strict scientific law is at its heart. And it’s all backed up with mathematical theorems by the brilliant William Dembski: difficult to understand perhaps, but it must all make sense because he is an academic and a professor. All in all it gives the impression that at last ID supporters have rigorous science on their side, and therefore something they can shout from the rooftops and be proud of.

And yet it is all rather ill-defined and slippery. As I indicated in a previous post, Shannon’s notion of information is liable to betray the ID community: information can unexpectedly appear and disappear, and things that seem to carry a lot of information turn out to carry none, and vice versa. It also fails to capture the surprising fact that simple-to-complex mathematical transformations can take place: although the vast majority of complex forms are algorithmically irreducible, we know that a limited class, albeit a very limited class, of complex forms can arise from simple conditions and algorithms.
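Rule 30, an elementary cellular automaton studied by Stephen Wolfram, is a standard illustration of both points (an example not drawn from the post itself). Its update rule fits in one byte and it starts from a single live cell, yet its centre column looks statistically random: the frequency of 1s is close to a half, so a naive Shannon estimate assigns it close to one bit per symbol, even though a few lines of code reproduce it exactly. The sketch computes only that crude order-0 estimate.

```python
import math

def rule30_centre_column(steps):
    """Run Rule 30 from a single live cell and record the centre cell at each step."""
    width = 2 * steps + 1          # wide enough that the edges never reach the centre
    cells = [0] * width
    cells[steps] = 1
    column = []
    for _ in range(steps):
        column.append(cells[steps])
        new = [0] * width
        for i in range(1, width - 1):
            pattern = (cells[i - 1] << 2) | (cells[i] << 1) | cells[i + 1]
            new[i] = (30 >> pattern) & 1   # bit `pattern` of the rule number 30
        cells = new
    return column

def bits_per_symbol(seq):
    """Order-0 Shannon entropy estimate from the frequency of 1s."""
    f = sum(seq) / len(seq)
    if f in (0.0, 1.0):
        return 0.0
    return -(f * math.log2(f) + (1 - f) * math.log2(1 - f))

column = rule30_centre_column(500)
print(column[:16], round(bits_per_symbol(column), 3))
```

By this measure the output looks information-rich, while its algorithmic description is tiny: the two notions of “information” come apart.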

In the light of the foregoing, are we to conclude that the ID community’s favourite mantra means anything? Actually I think it does mean something: it expresses the intuition that our universe is wholly contingent. It is the “something” in the question “Why is there something rather than nothing?”, and so the cosmos comes to us with Shannon’s “surprisal” value. This intuition can be placed on a (slightly) firmer footing, with a little hand waving, as follows. If the universe could be shown to be a logical necessity, then one might interpret this as the universe having P(Universe) = 1*. But nothing we know about the universe allows us to conclude P(Universe) = 1. In fact, as Dembski’s analysis suggests, our particular universe has been taken from an immense class of possibilities, and from a very small class within that great space of possibilities at that. Hence, if it is meaningful to apply probabilities in this extreme outer framing context (a manoeuvre that is not beyond criticism), we obtain a value of P(Universe) a lot less than 1. So –log P(U), the information in the universe U, is very large, and thus the information value of any outcome that presupposes U will also be large. The ID community may yet have the last laugh.
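The last step can be spelled out with the chain rule for probabilities, reading the final claim as being about an outcome E that can only occur if U obtains (an interpretive assumption), so that P(E) = P(E ∩ U):

```latex
-\log P(E) = -\log P(E \cap U) = -\log P(U) - \log P(E \mid U) \;\ge\; -\log P(U),
\qquad \text{since } -\log P(E \mid U) \ge 0 .
```

Any such outcome therefore carries at least the information –log P(U) of the universe itself, plus whatever is specific to E.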

Stop Press 3/6/9

William Dembski has recently posted this reaction to some of the criticism he has received regarding his “Conservation of Information”.

Footnote

*Presumably because of God’s Aseity, only P(God) = 1. But if P(God) = 1 then from Shannon’s definition of information God contains no information! This is yet another surprising result that is a product of ambiguities in the application of probability and its meaning.
